One of the foundations of statistics is the fact that you can add variances. You may find this surprising, because the formula for the variance does not suggest it at first glance. This article proves why, and under which circumstances, adding variances is valid. If you are not yet familiar with them, please check my articles on the addition and multiplication of expected values first.

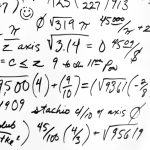

First, consider the definition of the variance for a random variable $X$:

$$\mathrm{Var}(X) = E\left[(X - E[X])^2\right]$$
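To make the definition $\mathrm{Var}(X) = E[(X - E[X])^2]$ concrete, here is a minimal sketch that evaluates it for a finite distribution. The fair six-sided die and the helper names `expectation` and `variance` are my own illustrative assumptions, not part of the proof.

```python
from fractions import Fraction

def expectation(dist, f=lambda x: x):
    """E[f(X)] for a finite distribution given as {value: probability}."""
    return sum(p * f(x) for x, p in dist.items())

def variance(dist):
    """Var(X) = E[(X - E[X])^2], computed straight from the definition."""
    mean = expectation(dist)
    return expectation(dist, lambda x: (x - mean) ** 2)

# Assumed example: a fair six-sided die, each face with probability 1/6.
die = {face: Fraction(1, 6) for face in range(1, 7)}
die_mean = expectation(die)
die_var = variance(die)
print(die_mean, die_var)  # 7/2 35/12
```

Using `Fraction` keeps the arithmetic exact, so the result can be compared with a hand calculation without rounding noise.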

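As we want to demonstrate that $\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y)$, let’s substitute $X + Y$ for $X$. It is valid to add expected values, so we replace $E[X + Y]$ by $E[X] + E[Y]$ afterwards.

$$\mathrm{Var}(X + Y) = E\left[(X + Y - E[X + Y])^2\right] = E\left[(X + Y - E[X] - E[Y])^2\right]$$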

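Unfortunately, multiplying out this mess is hard work, but we need to do it 😀 I did this below. Note that, in the second step, I split the whole expected value on the right-hand side.

$$\begin{aligned}
\mathrm{Var}(X + Y) &= E\left[(X - E[X])^2 + (Y - E[Y])^2 + 2\,(X - E[X])(Y - E[Y])\right] \\
&= E\left[(X - E[X])^2\right] + E\left[(Y - E[Y])^2\right] + 2\,E\left[(X - E[X])(Y - E[Y])\right]
\end{aligned}$$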

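It is not obvious, but the first and second summands are variances themselves! Therefore, further simplification gives:

$$\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2\,E\left[(X - E[X])(Y - E[Y])\right]$$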

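I reorganized the right-hand side of the equation, so the term we still have to work on is the last one. As you know, we can take constant factors out of the expected value. For example, $E\left[X\,E[Y]\right]$ becomes $E[X]\,E[Y]$, because $E[Y]$ is constant with respect to $X$. With this technique we get the following.

$$\begin{aligned}
E\left[(X - E[X])(Y - E[Y])\right] &= E[XY] - E\left[X\,E[Y]\right] - E\left[E[X]\,Y\right] + E[X]\,E[Y] \\
&= E[XY] - E[X]\,E[Y]
\end{aligned}$$

$$\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y) + 2\left(E[XY] - E[X]\,E[Y]\right)$$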

The term on the right, $E[XY] - E[X]\,E[Y]$, is known as the covariance $\mathrm{Cov}(X, Y)$. Assuming that $X$ and $Y$ are independent (or at least uncorrelated), this term equals zero. Why? Because then you can make use of the multiplication rule:

$$E[XY] = E[X]\,E[Y]$$

That’s why you can add the variances of uncorrelated random variables: $\mathrm{Var}(X + Y) = \mathrm{Var}(X) + \mathrm{Var}(Y)$. Eureka!
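If you would like to check the result numerically, the following sketch enumerates two small joint distributions exactly. The dice setup and the helper names `expect` and `var_sum_parts` are assumptions I chose for illustration. The first case uses two independent fair dice, the second uses the same die twice ($Y = X$), so you can see the covariance term vanish in one case and matter in the other.

```python
from fractions import Fraction
from itertools import product

def expect(joint, f):
    """E[f(X, Y)] for a finite joint distribution {(x, y): probability}."""
    return sum(p * f(x, y) for (x, y), p in joint.items())

def var_sum_parts(joint):
    """Return (Var(X+Y), Var(X), Var(Y), Cov(X, Y)) for a joint distribution."""
    ex = expect(joint, lambda x, y: x)
    ey = expect(joint, lambda x, y: y)
    var_x = expect(joint, lambda x, y: (x - ex) ** 2)
    var_y = expect(joint, lambda x, y: (y - ey) ** 2)
    cov = expect(joint, lambda x, y: x * y) - ex * ey  # E[XY] - E[X]E[Y]
    var_sum = expect(joint, lambda x, y: (x + y - ex - ey) ** 2)
    return var_sum, var_x, var_y, cov

faces = range(1, 7)
# Independent case: two separate fair dice, every pair has probability 1/36.
independent = {(x, y): Fraction(1, 36) for x, y in product(faces, faces)}
# Dependent case: Y is the same die as X, so only the pairs (x, x) occur.
dependent = {(x, x): Fraction(1, 6) for x in faces}

for name, joint in [("independent", independent), ("dependent", dependent)]:
    var_sum, var_x, var_y, cov = var_sum_parts(joint)
    # The general identity holds in both cases.
    assert var_sum == var_x + var_y + 2 * cov
    print(name, var_sum, var_x + var_y, cov)
# independent 35/6 35/6 0
# dependent 35/3 35/6 35/12
```

For the independent dice the covariance is zero and the variances simply add. For the dependent case the sum of the variances alone (35/6) falls short of the true variance (35/3), and the missing part is exactly twice the covariance.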
