Those of you who have already tested the variance of data from a normal distribution may have asked yourselves how the link between the normal variance and the chi-squared distribution arises. Trust me: the story I am about to tell you is an exciting one!

Simple explanation based on population mean

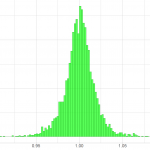

The chi-squared distribution with $k$ degrees of freedom is defined as the sum of $k$ independent squared standard-normal variables

$$\chi^2_k = \sum_{i=1}^{k} Z_i^2$$

with

$$Z_i \sim N(0, 1).$$
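If you want to see this definition in action, here is a minimal simulation sketch using only the Python standard library. The degrees of freedom (`k = 5`), the seed and the number of draws are arbitrary choices of mine, and `chi_squared_draw` is a hypothetical helper name. It relies on the known facts that a chi-squared variable with $k$ degrees of freedom has mean $k$ and variance $2k$.

```python
import random
import statistics


def chi_squared_draw(k, rng):
    """Return one draw: the sum of k squared standard-normal variables."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))


rng = random.Random(42)  # fixed seed (arbitrary) so the run is reproducible
k = 5                    # degrees of freedom, chosen only for illustration
draws = [chi_squared_draw(k, rng) for _ in range(20_000)]

sample_mean = statistics.fmean(draws)
sample_var = statistics.variance(draws)

# Theory: a chi-squared variable with k degrees of freedom has mean k and variance 2k.
print(f"simulated mean:     {sample_mean:.2f} (theory: {k})")
print(f"simulated variance: {sample_var:.2f} (theory: {2 * k})")
```

The simulated values should land close to the theoretical ones, but they will not match exactly because of sampling noise.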

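Now, the population variance is given by

$$\sigma^2 = E\left[(X - \mu)^2\right].$$

For independent observations $x_1, \ldots, x_n$ from $N(\mu, \sigma^2)$, an estimator for the variance based on the population mean $\mu$ is

$$\hat{\sigma}^2 = \frac{1}{n} \sum_{i=1}^{n} (x_i - \mu)^2.$$

In order to demonstrate the relationship to the chi-squared distribution, let’s multiply by $n$:

$$n \hat{\sigma}^2 = \sum_{i=1}^{n} (x_i - \mu)^2.$$

Dividing by $\sigma^2$ gives a z-transformation. In turn, this results in independent squared standard-normal variables $Z_i = (x_i - \mu)/\sigma$ for each random observation $x_i$:

$$\frac{n \hat{\sigma}^2}{\sigma^2} = \sum_{i=1}^{n} \left(\frac{x_i - \mu}{\sigma}\right)^2 = \sum_{i=1}^{n} Z_i^2.$$

But this is actually the definition of the chi-squared distribution! Hence, for a known population mean, the ratio of the variance estimate to the true underlying population variance, multiplied by $n$, follows a chi-squared distribution with $n$ degrees of freedom, where $n$ denotes the sample size:

$$\frac{n \hat{\sigma}^2}{\sigma^2} \sim \chi^2_n.$$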
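A quick way to convince yourself of this result is to simulate it. The sketch below repeatedly draws $n$ normal observations, computes the variance estimate around the known population mean, scales it by $n / \sigma^2$ and checks that the outcome has mean $n$ and variance $2n$, as a chi-squared variable with $n$ degrees of freedom should. The parameter values and the helper name `scaled_variance_known_mean` are assumptions of mine for illustration only.

```python
import random
import statistics


def scaled_variance_known_mean(n, mu, sigma, rng):
    """Draw n normal observations and return n * sigma_hat^2 / sigma^2,
    where sigma_hat^2 is computed around the known population mean mu."""
    xs = [rng.gauss(mu, sigma) for _ in range(n)]
    sigma_hat_sq = sum((x - mu) ** 2 for x in xs) / n
    return n * sigma_hat_sq / sigma ** 2


rng = random.Random(7)       # fixed seed (arbitrary) for reproducibility
n, mu, sigma = 8, 10.0, 3.0  # illustrative values, not taken from any data set
ratios = [scaled_variance_known_mean(n, mu, sigma, rng) for _ in range(20_000)]

mean_ratio = statistics.fmean(ratios)
var_ratio = statistics.variance(ratios)

# Theory: chi-squared with n degrees of freedom has mean n and variance 2n.
print(f"simulated mean:     {mean_ratio:.2f} (theory: {n})")
print(f"simulated variance: {var_ratio:.2f} (theory: {2 * n})")
```

Note that the result does not depend on the particular values of `mu` and `sigma`, since the z-transformation removes both.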

Advanced explanation based on sample observations only

Be aware that you usually don’t know the population mean, so you might be interested in proving the claim that the sample variance is also related to the chi-squared distribution.

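We make use of the relationship

$$x_i - \bar{x} = (x_i - \mu) - (\bar{x} - \mu)$$

and can write the sample variance as

$$s^2 = \frac{1}{n-1} \sum_{i=1}^{n} (x_i - \bar{x})^2 = \frac{1}{n-1} \left[ \sum_{i=1}^{n} (x_i - \mu)^2 - n (\bar{x} - \mu)^2 \right].$$

The cross term of the expanded square simplifies because $\sum_{i=1}^{n} (x_i - \mu) = n (\bar{x} - \mu)$.

Multiplying by $(n-1)/\sigma^2$ gives

$$\frac{(n-1) s^2}{\sigma^2} = \sum_{i=1}^{n} \left(\frac{x_i - \mu}{\sigma}\right)^2 - \left(\frac{\bar{x} - \mu}{\sigma / \sqrt{n}}\right)^2.$$

Rearranging the equation gives

$$\sum_{i=1}^{n} \left(\frac{x_i - \mu}{\sigma}\right)^2 = \frac{(n-1) s^2}{\sigma^2} + \left(\frac{\bar{x} - \mu}{\sigma / \sqrt{n}}\right)^2.$$

Introducing $Q_1$, $Q_2$ and $Q_3$ helps to distinguish the different terms. The equation now reads as follows:

$$\underbrace{\sum_{i=1}^{n} \left(\frac{x_i - \mu}{\sigma}\right)^2}_{Q_1} = \underbrace{\frac{(n-1) s^2}{\sigma^2}}_{Q_2} + \underbrace{\left(\frac{\bar{x} - \mu}{\sigma / \sqrt{n}}\right)^2}_{Q_3}.$$

$Q_1 \sim \chi^2_n$ and $Q_3 \sim \chi^2_1$, because these terms refer to the population mean $\mu$ and are therefore built from independent standard-normal variables. The summands of $Q_2$, in contrast, depend on $\bar{x}$. However, $\bar{x}$ is calculated from $x_1, \ldots, x_n$, and therefore you can freely vary at most $n-1$ of the $x_i$ without modifying the sum. As a consequence, $Q_2$ only has $n-1$ degrees of freedom. According to Cochran’s Theorem, $Q_1 = Q_2 + Q_3$ and the degrees of freedom on both sides must also be equal. That is: $n$ for $Q_1$, $n-1$ for $Q_2$ and $1$ for $Q_3$, so that $n = (n-1) + 1$.

But then, also by Cochran’s Theorem, $Q_2$ and $Q_3$ are independent and $Q_2 \sim \chi^2_{n-1}$ (similar to $Q_1 \sim \chi^2_n$). It follows

$$\frac{(n-1) s^2}{\sigma^2} \sim \chi^2_{n-1}.$$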
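To make the decomposition less abstract, here is a small numerical sketch. It checks two things on simulated normal data: first, that the sum of squared z-scores around $\mu$ really equals the scaled sample variance plus the squared z-score of the sample mean, and second, that the scaled sample variance $(n-1)s^2/\sigma^2$ has mean $n-1$ and variance $2(n-1)$, as expected for $n-1$ degrees of freedom. The helper name `decomposition`, the parameter values and the seed are my own assumptions.

```python
import math
import random
import statistics


def decomposition(xs, mu, sigma):
    """Return (q1, q2, q3) for one sample:
    q1 = sum of squared z-scores around the population mean,
    q2 = (n - 1) * s^2 / sigma^2 with the usual sample variance s^2,
    q3 = squared z-score of the sample mean."""
    n = len(xs)
    x_bar = statistics.fmean(xs)
    s_sq = statistics.variance(xs)  # sample variance, divides by n - 1
    q1 = sum(((x - mu) / sigma) ** 2 for x in xs)
    q2 = (n - 1) * s_sq / sigma ** 2
    q3 = ((x_bar - mu) / (sigma / math.sqrt(n))) ** 2
    return q1, q2, q3


rng = random.Random(123)    # fixed seed (arbitrary) for reproducibility
n, mu, sigma = 6, 2.0, 1.5  # illustrative values only

q2_draws = []
max_gap = 0.0  # largest observed |q1 - (q2 + q3)|, should be rounding noise
for _ in range(20_000):
    xs = [rng.gauss(mu, sigma) for _ in range(n)]
    q1, q2, q3 = decomposition(xs, mu, sigma)
    max_gap = max(max_gap, abs(q1 - (q2 + q3)))
    q2_draws.append(q2)

q2_mean = statistics.fmean(q2_draws)
q2_var = statistics.variance(q2_draws)

print(f"largest gap between q1 and q2 + q3: {max_gap:.2e}")
print(f"simulated mean of q2:     {q2_mean:.2f} (theory: {n - 1})")
print(f"simulated variance of q2: {q2_var:.2f} (theory: {2 * (n - 1)})")
```

Matching the first two moments is of course no proof, but it is a cheap sanity check that one degree of freedom is indeed lost when $\mu$ is replaced by $\bar{x}$.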

Hopefully this explanation helped you get an idea of why the variance is related to the chi-squared distribution 🙂
